What do we do if we don’t find an appropriate component library for our projects and requirements? We build one!

- Author: Lilla Fésüs

- Date: 10 February 2021

- Reading time: 8 minutes

When one of our customers pitched us their idea of a whole universe of branded applications, including websites, mobile apps and desktop web apps, we faced the challenge of creating a customized and reusable component library that would also double as living style guide documentation for any future development. Write once, run and use anywhere. In the world of web components, micro frontends, monorepos and npm, we knew it would not be a problem from a technical point of view, but we needed a workflow for it.

We need a workflow

The biggest challenge in developing our UI library was that we needed to build it as part of the “parent” projects. It would grow as we implemented the “main” features. This, however, meant a circular dependency: the project needed the library, and the library needed the project.

The npm way

First, we came up with the following workflow, based on the classic way of publishing the library as an npm package, but with the component implemented in the parent project:

1. implement the button in the parent project

2. once it is ready, copy it over to the UI library and create a merge request

3. once the merge request in the UI library is merged and the package is published, install/update it in the parent project and replace the local button with the installed one

4. create a merge request in the parent project

However, the word “copy” rang a warning bell, and the replacement step sounded way too complicated, so we turned it around and sketched another way:

1. implement the button in the UI library

2. install it locally in the parent project and test it; if necessary, repeat from step 1

3. once it is ready, create a merge request in the UI library

4. once the merge request in the UI library is merged and the package is published, install/update it in the parent project

5. create a merge request in the parent project

Review apps

At this point it is important to mention that with each merge request we also deploy a review app. Review apps provide an automatic live preview of the changes made in a feature branch by spinning up a dynamic environment for the merge request. They allow designers and product managers to see the changes without having to check out the branch and run it in a sandbox environment. A review app obviously requires a successful build, which in turn requires installing the npm packages. However, if the UI library package is not yet published, it is not available in the job, so the pipeline fails.

Monorepos

I know that your first reaction to the problem described above is probably to use a monorepo, and believe me, we have discussed the topic a hundred times already. We held workshops and round tables, did research, listed the pros and cons, and in the end decided against monorepos. To sum it up: there is no silver bullet. Apart from the UI library, the applications have no strong connection to each other, and we wanted to keep it that way. We also wanted separate release trains and the freedom to decide about updates, without forcing older applications in maintenance to update when the customer doesn’t want it. I will not go into further detail, as it goes beyond the scope of this blog post. If you are using or can use monorepos, you might as well stop reading here, because your journey will be completely different: you will face other problems and tackle other obstacles.

The subtree way

Our first impression of the workflow described above was that it involved too many dependencies and too much merge request juggling, with features and issues blocked until the merge request in the UI library was merged. Generally speaking: just a lot of chaos. And let’s be honest: unless you are a masochistic, self-punishing, hopeless developer tied to a desk in the basement and told what you are allowed to do, you try to avoid chaos and come up with a comfy, lazy solution. So that’s how we reinvented the wheel!

We wanted to treat the UI library as part of one parent project but share it with other projects at the same time. As soon as the library became stable enough, we would publish it to npm, adjust some import paths and remove it from the projects. One of our senior developers told us, with bad memories haunting his voice, that we could use Git submodules for this, but simply shouldn’t. This thought-provoking impulse, however, lit the way to what we believed at the time was the Holy Grail: Git subtrees.

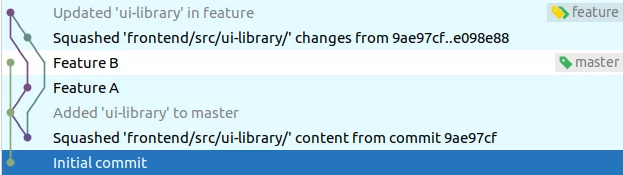

git subtree lets you nest one repository inside another as a sub-directory. That way, the code from the ui-library project would be immediately and directly available in the project/frontend/src/ui-library sub-folder. Furthermore, changes within the ui-library sub-folder could be pushed back to the ui-library Git repository, and its history would grow along with the main development project.
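For readers who have not used git subtree, the day-to-day commands look roughly like the ones below. This TypeScript sketch only assembles and runs the documented `git subtree add|pull|push --prefix=<dir> <repository> <ref>` invocations; the remote URL, the `master` branch and the use of `--squash` are assumptions for illustration, not our actual configuration.

```typescript
import { execFileSync } from "node:child_process";

type SubtreeCommand = "add" | "pull" | "push";

// The prefix comes from the post; the remote URL is a hypothetical placeholder.
const UI_LIBRARY_PREFIX = "frontend/src/ui-library";
const UI_LIBRARY_REMOTE = "git@git.example.com:acme/ui-library.git";

// Builds the arguments for `git subtree <command> --prefix=<dir> <repository> <ref>`.
function subtreeArgs(command: SubtreeCommand, ref = "master"): string[] {
  const args = ["subtree", command, `--prefix=${UI_LIBRARY_PREFIX}`, UI_LIBRARY_REMOTE, ref];
  // Assumption: squash the ui-library history into one commit on add/pull.
  // --squash is not passed to push.
  if (command !== "push") {
    args.push("--squash");
  }
  return args;
}

// add:  nest the ui-library repository under the prefix (run once)
// pull: bring in new ui-library commits, e.g. a colleague's new button
// push: send commits that touched the prefix back to the ui-library repository
function runSubtree(command: SubtreeCommand): void {
  execFileSync("git", subtreeArgs(command), { stdio: "inherit" });
}
```

Calling `runSubtree("pull")` from the parent project's root would run `git subtree pull --prefix=frontend/src/ui-library <remote> master --squash`.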

All that glitters is not gold. Git subtree only worked this smoothly in theory. Once we started using it, we discovered some big drawbacks.

Pitfall with rebase

Company-wide, we use fast-forward merges with squashed commits as our merge method, so before you can merge your feature branch into master, it needs to be rebased onto master. However, we were not aware of a pretty nasty pitfall in combination with subtrees: if you update your subtree in the branch and then rebase, or squash the merge commit during merging, you lose the path information. Let me demonstrate.

Imagine you start working on Feature A and your colleague on Feature B. He needs a button for Feature B and therefore adds it to the UI library. Once his feature is finished, you realize that you also need the button for Feature A, so you update the UI library in your branch.

Everything is fine, you are done and would like to create a merge request that is ready to be merged into master. For this, you rebase your branch onto master. Baam! You’ve lost the merge commit and, with it, the path information. As a result, the newly added button is not in frontend/src/ui-library but in the root folder.

Alright, we could prevent this with some rules, but it simply smells.
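To make the lost path information more tangible, here is a deliberately simplified toy model in TypeScript; it is not how Git works internally. It assumes a commit is just a set of added files: the subtree merge commit is the only place where the ui-library files get mapped under the prefix, while a plain rebase drops merge commits by default and replays the remaining commits with their original, root-relative paths.

```typescript
// Toy model: a "commit" is reduced to the files it adds (path -> content).
type FileChanges = Record<string, string>;

const PREFIX = "frontend/src/ui-library";

// Subtree pull: the merge commit maps the incoming files, which are stored
// relative to the ui-library repository root, under the prefix.
function applySubtreeMerge(tree: FileChanges, incoming: FileChanges, prefix: string): FileChanges {
  const result: FileChanges = { ...tree };
  for (const file of Object.keys(incoming)) {
    result[`${prefix}/${file}`] = incoming[file];
  }
  return result;
}

// Plain rebase: the merge commit is dropped and the ui-library commit is
// replayed as-is, so its files keep their root-relative paths.
function applyReplayedCommit(tree: FileChanges, incoming: FileChanges): FileChanges {
  const result: FileChanges = { ...tree };
  for (const file of Object.keys(incoming)) {
    result[file] = incoming[file];
  }
  return result;
}

const master: FileChanges = { "frontend/src/app/app.component.ts": "app" };
// Your colleague's commit in the ui-library repository (hypothetical file name).
const buttonCommit: FileChanges = { "button/button.component.ts": "button" };

const beforeRebase = applySubtreeMerge(master, buttonCommit, PREFIX);
const afterRebase = applyReplayedCommit(master, buttonCommit);

console.log(Object.keys(beforeRebase)); // the button lands under frontend/src/ui-library/
console.log(Object.keys(afterRebase)); // the button lands in the root folder
```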

Dependency hell

Then we arrived in dependency hell. Since the ui-library sub-folder did not have its own dependencies, the parent projects had to take care of installing them. That sounds crazy, right? Why would I install Angular Material in the main projects when they will never use it directly? Why should I have to update Angular when the version in the UI library has changed?

Missing CLI and tooling

The missing dependencies led to another problem: we had no CLI and tooling in the ui-library sub-folder. The UI library was already an Angular project, because we developed it with the intention of publishing it once it became stable. So when we added a new library with ng g l, we wanted it registered in the UI library’s angular.json and tsconfig. However, the parent project’s CLI did not know anything about the sub-folder’s structure, and it could only insert the path into its own tsconfig.

Our first idea was to install the Node modules in the ui-library sub-folder, switch to this folder whenever we needed the CLI, and switch back to the parent folder for the build. Little did we know about path resolution in Node.js at that time. According to the documentation:

If the module identifier passed to require() is not a core module, and does not begin with '/', '../', or './', then Node.js starts at the parent directory of the current module, and adds /node_modules, and attempts to load the module from that location.

For example, if the file at '/home/ry/projects/foo.js' called require('bar.js'), then Node.js would look in the following locations, in this order:

- /home/ry/projects/node_modules/bar.js
- /home/ry/node_modules/bar.js
- /home/node_modules/bar.js
- /node_modules/bar.js

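Aaand we were back in dependency hell, with errors like CommonModule declared in /usr/src/app/src/frontend/ui-library/node_modules/@angular/common/common.d.ts is not exported from @angular/common. Files inside the sub-folder now resolved @angular/common from the sub-folder’s own node_modules, while the parent project used its own copy.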
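The lookup order from the quoted documentation can be reproduced in a few lines. This is a simplified TypeScript sketch for POSIX paths that leaves out the global folders; the two file locations are hypothetical, loosely based on the path in the error message. In a real Node.js process, `require.resolve.paths(request)` returns the actual list, and TypeScript's `node` module resolution walks up the folders in a similar way, which would explain why the compiler picked up two copies.

```typescript
import * as path from "node:path";

// Sketch of the NODE_MODULES_PATHS step from the Node.js docs (POSIX paths only):
// walk up from the importing module's directory and collect <dir>/node_modules,
// skipping directories that are themselves called node_modules.
// Global folders such as NODE_PATH are left out for brevity.
function nodeModulesLookupPaths(startDir: string): string[] {
  const parts = startDir.split("/").filter((part) => part.length > 0);
  const dirs: string[] = [];
  for (let i = parts.length; i >= 0; i--) {
    if (i > 0 && parts[i - 1] === "node_modules") {
      continue;
    }
    dirs.push(path.posix.join("/", ...parts.slice(0, i), "node_modules"));
  }
  return dirs;
}

// The documentation example: require('bar.js') called from /home/ry/projects/foo.js.
const fooDir = path.posix.dirname("/home/ry/projects/foo.js");
console.log(nodeModulesLookupPaths(fooDir).map((dir) => `${dir}/bar.js`));

// Hypothetical locations of a library component and a parent-project component.
const libraryLookup = nodeModulesLookupPaths("/usr/src/app/src/frontend/ui-library/src/lib");
const appLookup = nodeModulesLookupPaths("/usr/src/app/src/frontend/src/app");
const libraryNodeModules = "/usr/src/app/src/frontend/ui-library/node_modules";

console.log(libraryLookup.indexOf(libraryNodeModules)); // 2: searched before any folder outside ui-library
console.log(appLookup.indexOf(libraryNodeModules)); // -1: never searched from the app
```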

Missing quality assurance in CI/CD

As if that weren’t enough, the last straw was the missing CI/CD. Tests did not run for the ui-library sub-folder, because it wasn’t declared in the parent project’s angular.json. It required its own stages and jobs, and they did not run in the project-specific pipelines, only when we pushed back to the UI library’s repository.

Long story short: it was a complete mess. At this point we realized that if this were the easier way, it would already be the standard. We went back to the drawing board and caved in: we would use npm packages.

Back to the roots

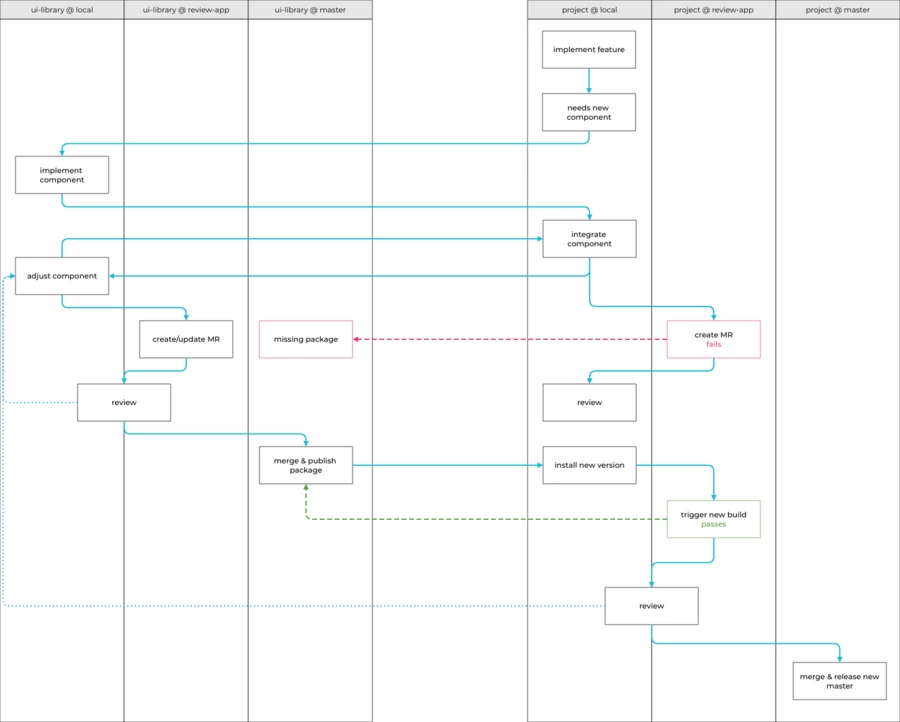

After our excursion through the nine circles of hell, we returned to the classic npm way and established the following workflow:

1. implement the feature locally

2. realize you need a component

3. implement the component in the UI library locally

4. integrate the component in the project locally

5. adjust the component in the UI library locally and repeat from step 4 if necessary

6. when you are finished, create a merge request for both the UI library and the project

7. the pipeline for the project will fail due to the missing package

8. start the review for the UI library locally or remotely (e.g. by a designer) and for the project only locally, and repeat from step 5 if necessary

   - even though the review app for the project will not work at this step, an early review saves time and can even reveal changes required in the UI library

9. merge the UI library’s merge request and automatically publish the package

10. install the new version locally

11. push it and trigger a new build that will now pass

12. review the project once again locally or remotely (e.g. by a product manager) and repeat from step 5 if necessary

13. merge the project’s merge request and release a new master

Installing local packages within Docker

Short answer: you cannot.

Another company-wide practice is using Docker images for front-end development, so that we always have the correct versions of Node, npm and yarn, and other tools like Lighthouse or BackstopJS. Once again, I won’t go into the details behind this decision. The different projects and the UI library run in different Docker containers, so you cannot use symlinks between them, and therefore cannot use npm link.

npm link creates a symlink in the global folder {prefix}/lib/node_modules/<package> that links to the package where the npm link command was executed. Next, in some other location, npm link package-name creates a symbolic link from the globally installed package-name to node_modules/ of the current folder. But since the projects run in different Docker containers, the globally symlinked package in one container is not available in the other.
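Why symlinks do not survive the container boundary can be shown without Docker at all: a symlink only stores a path string, which is resolved in the filesystem of whoever follows it. The following TypeScript sketch is a simplification that uses one direct link instead of npm's two hops via the global folder, and all folder names are hypothetical. It creates such a link and then removes the target to mimic a container in which that path does not exist.

```typescript
import * as fs from "node:fs";
import * as os from "node:os";
import * as path from "node:path";

// Simplified stand-in for `npm link`: one direct symlink instead of the
// two hops via the global {prefix}/lib/node_modules folder.
function linkPackage(packageDir: string, linkPath: string): void {
  fs.mkdirSync(path.dirname(linkPath), { recursive: true });
  fs.symlinkSync(packageDir, linkPath, "dir");
}

function danglingLinkDemo(): { resolvesHere: boolean; resolvesElsewhere: boolean; isStillALink: boolean } {
  const root = fs.mkdtempSync(path.join(os.tmpdir(), "link-demo-"));
  const dist = path.join(root, "ui-library", "dist");
  const link = path.join(root, "project", "node_modules", "ui-library");
  fs.mkdirSync(dist, { recursive: true });
  linkPackage(dist, link);

  const resolvesHere = fs.existsSync(link); // existsSync follows the link

  // Mimic the other container, where the stored target path does not exist.
  fs.rmSync(dist, { recursive: true, force: true });
  const resolvesElsewhere = fs.existsSync(link);
  const isStillALink = fs.lstatSync(link).isSymbolicLink(); // lstat does not follow it

  fs.rmSync(root, { recursive: true, force: true });
  return { resolvesHere, resolvesElsewhere, isStillALink };
}

console.log(danglingLinkDemo()); // { resolvesHere: true, resolvesElsewhere: false, isStillALink: true }
```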

We also thought about symlinking the folders manually or synchronizing them with rsync, but we ran into other issues (Link1, Link2 did not work, Link3).

In the end, our “integrate the component in the project locally” step looks like this:

- build the UI library

- copy the dist folder into the project’s node_modules folder

  - I personally wrote a zsh command for it, so it is not as bad and slow as it sounds
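The zsh command itself is not part of this post, so the following is only a minimal TypeScript sketch of the same idea, assuming the UI library has already been built into a dist folder. The paths and the scoped package name are hypothetical placeholders.

```typescript
import * as fs from "node:fs";
import * as path from "node:path";

// Recursively copies a directory (plain files and folders only).
function copyDir(src: string, dest: string): void {
  fs.mkdirSync(dest, { recursive: true });
  for (const entry of fs.readdirSync(src, { withFileTypes: true })) {
    const from = path.join(src, entry.name);
    const to = path.join(dest, entry.name);
    if (entry.isDirectory()) {
      copyDir(from, to);
    } else {
      fs.copyFileSync(from, to);
    }
  }
}

// Replaces node_modules/<packageName> in the project with a fresh build output.
// Assumes the UI library has already been built into distDir.
function installLocalBuild(distDir: string, projectDir: string, packageName: string): string {
  // Scoped names such as "@acme/ui-library" become nested folders.
  const target = path.join(projectDir, "node_modules", ...packageName.split("/"));
  // Remove the previous copy first so files deleted in the library do not linger.
  fs.rmSync(target, { recursive: true, force: true });
  copyDir(distDir, target);
  return target;
}

// Hypothetical usage:
// installLocalBuild("ui-library/dist/ui-library", "customer-portal", "@acme/ui-library");
```

Deleting the old copy before copying is a deliberate choice: otherwise files removed from the library would linger in node_modules and could hide problems until the real package is installed.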

Conclusion

Our workflow isn’t flawless. The failing pipelines and missing review apps in the projects until the changes in the UI library are released are an unsolvable circular dependency we have to live with. However, the local integration of the component into the project by copying is definitely worth another look, because automation is simply awesome and efficient.

In the end, we neither reinvented the wheel nor found the Holy Grail, but we had a highly instructive and extremely educational journey, in which we learned from our mistakes and were able to step back and admit them. If it hadn’t been for our laziness and out-of-the-box thinking, we wouldn’t have learned about subtrees, path resolution in Node.js or symlinks in Docker.

In Part 2, I’m going to write about our supporting tooling, like Storybook, Zeplin and InVision, and explain why we do not use Bit or Lerna (yet). Stay tuned!